Cooling is often a lower priority than other concerns when server rooms and small to mid-size data centers are first built. As equipment is added to meet growing computing needs, the additional heat can degrade equipment performance and cause costly shutdowns. Quick, unplanned expansion can create cooling inefficiencies that magnify these problems. Data center managers might assume they need more cooling capacity, but that is often unnecessary. In many cases, low-cost rack cooling best practices can solve heat-related problems by optimizing airflow, which increases efficiency, prevents downtime and reduces costs.

Here are some practical recommendations for increasing data center cooling efficiency:

1. Measure intake temperatures.

Recirculation of hot air can raise equipment intake temperatures far above the ambient room temperature. While knowing the temperature of the room can be helpful, IT equipment intake temperatures are the primary data you should use to make decisions about cooling.

Environmental sensors allow remote monitoring of conditions to provide early warning of potential problems. UPS and PDU accessories, as well as stand-alone sensors that attach to racks, report temperature values remotely, record time-stamped logs and provide real-time warnings when temperatures exceed defined thresholds.

Recording temperature data on an ongoing basis is important because some heat-related problems only reveal themselves when equipment is under heavy use or when environmental conditions change. While it may be impractical to measure the intake temperature of every piece of equipment, taking representative samples from locations throughout your data center can help you identify problem areas. Be sure to get intake readings near the top of rack enclosures, where the temperature is typically higher.
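The sampling and alerting described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's sensor API: the `IntakeReading` record, the function names and the 27 °C alert threshold are all assumptions (27 °C is the commonly cited upper end of the ASHRAE recommended intake range, but you should use whatever limit fits your equipment). It flags logged readings above the threshold, hottest first, and reports each rack's peak intake temperature so top-of-rack hot spots stand out.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical alert threshold in degrees Celsius (assumption; adjust per site).
INTAKE_ALERT_C = 27.0


@dataclass
class IntakeReading:
    rack: str
    position: str  # "top", "middle" or "bottom" of the enclosure front
    temp_c: float
    timestamp: datetime


def readings_over_threshold(readings, threshold_c=INTAKE_ALERT_C):
    """Return readings above the threshold, hottest first."""
    hot = [r for r in readings if r.temp_c > threshold_c]
    return sorted(hot, key=lambda r: r.temp_c, reverse=True)


def hottest_by_rack(readings):
    """Map each rack to its highest logged intake temperature."""
    peaks = {}
    for r in readings:
        peaks[r.rack] = max(peaks.get(r.rack, r.temp_c), r.temp_c)
    return peaks


if __name__ == "__main__":
    # Illustrative values only, not real measurements.
    log = [
        IntakeReading("A1", "bottom", 21.5, datetime(2024, 6, 1, 14, 0)),
        IntakeReading("A1", "top", 28.0, datetime(2024, 6, 1, 14, 0)),
        IntakeReading("B3", "top", 25.0, datetime(2024, 6, 1, 14, 0)),
    ]
    for r in readings_over_threshold(log):
        print(r.rack, r.position, r.temp_c, r.timestamp.isoformat())
```

Because the log keeps timestamps, the same functions can be run over readings taken during peak load to catch problems that only appear under heavy use.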

2. Understand the proper role of HVAC systems.

Building HVAC systems are designed for the comfort of the people in the building. Tying your data center into your facility’s HVAC system might seem like an easy way to cool equipment, but it is rarely adequate. The return air stream may provide a convenient place to channel hot air from your data center, but building HVAC systems have limitations that restrict their use for IT cooling. For example, they are often set back or shut off during nights and weekends, while IT equipment keeps running around the clock.

3. Remove unnecessary heat sources.

Anything that adds heat to your data center increases the load on your cooling system. When possible, replace older equipment with newer equipment that uses less energy and produces less heat. For instance, replace old light fixtures with cooler LED models and replace traditional UPS systems with energy-saving on-line models. Since people and office equipment are also substantial heat sources, locate offices outside the data center.

4. Get rid of unused equipment.

When servers reach the end of their useful life, they often remain plugged in, drawing power and generating heat. Worse, older servers tend to be less energy-efficient than newer models. It can seem easier to leave equipment in place than to risk unplugging a device that someone might still be using, but taking the time to identify unused equipment pays off. Surveying your data center and decommissioning these “zombie servers” can significantly reduce energy and maintenance costs.
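One way to start such a survey is to filter an inventory export for machines that look idle on every metric at once. The sketch below is hypothetical: the `Server` fields assume you can export average CPU utilization, days since the last login or deployment, and average network traffic from your own monitoring tools, and the cutoff values are placeholders. It only produces a candidate list; each candidate still needs to be confirmed with its owner before it is unplugged.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    avg_cpu_percent: float      # average CPU utilization over the survey window
    days_since_last_login: int  # days since anyone logged in or deployed to it
    avg_network_kbps: float     # average network traffic over the survey window


# Hypothetical cutoffs (assumptions); tune them to your environment.
MAX_CPU_PERCENT = 5.0
MIN_IDLE_DAYS = 90
MAX_NETWORK_KBPS = 10.0


def zombie_candidates(servers):
    """Return names of servers that look idle on every metric."""
    return [
        s.name
        for s in servers
        if s.avg_cpu_percent < MAX_CPU_PERCENT
        and s.days_since_last_login >= MIN_IDLE_DAYS
        and s.avg_network_kbps < MAX_NETWORK_KBPS
    ]
```

Requiring all three conditions keeps the list conservative: a server that is quiet on CPU but still serving traffic is not flagged.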

5. Spread loads to reduce hot spots.

Blade servers and other high-density, high-wattage loads can create problematic hot spots, especially if they are installed in close proximity to each other. A small or mid-size data center with ten 2 kW racks and two 14 kW racks is typically more complex and costly to cool than a data center with twelve 4 kW racks, even though both have a total of 48 kW of equipment in twelve rack enclosures.
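The arithmetic behind that comparison is simple but worth making explicit: both layouts carry the same total heat load, yet the cooling system must handle the hottest individual rack, and the peak rack in the concentrated layout draws 3.5 times as much as any rack in the spread layout. A minimal sketch, using only the figures from the example above (the helper name is illustrative):

```python
def summarize_layout(rack_kw):
    """Return (total kW, peak kW per rack, rack count) for a list of rack loads."""
    return sum(rack_kw), max(rack_kw), len(rack_kw)


# The two layouts from the example above.
concentrated = [2] * 10 + [14] * 2  # ten 2 kW racks and two 14 kW racks
spread = [4] * 12                   # twelve 4 kW racks

print(summarize_layout(concentrated))  # (48, 14, 12)
print(summarize_layout(spread))        # (48, 4, 12)
```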

Virtualization and consolidation projects can also make loads denser and less predictable, so cooling requirements should be considered throughout the design of these projects. Reducing power and cooling costs usually provides a greater benefit than saving space, so there is a real advantage to spreading loads when room is available.
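When room is available, even a simple placement rule helps keep per-rack loads even. The sketch below uses a largest-first greedy heuristic (the same idea as longest-processing-time scheduling): sort the equipment loads from largest to smallest and always place the next one in the rack with the lowest running total. It is an illustration under stated assumptions, not a complete planning tool: it ignores rack-unit space, power circuit limits, weight and network constraints, and it does not guarantee the most even possible result.

```python
import heapq


def spread_loads(loads_kw, rack_count):
    """Greedy placement: assign each load, largest first, to the rack with the
    lowest current total. Returns a list of per-rack totals in kW."""
    # Min-heap of (current total kW, rack index) so the least-loaded rack pops first.
    racks = [(0.0, i) for i in range(rack_count)]
    heapq.heapify(racks)
    totals = [0.0] * rack_count
    for load in sorted(loads_kw, reverse=True):
        total, i = heapq.heappop(racks)
        total += load
        totals[i] = total
        heapq.heappush(racks, (total, i))
    return totals
```

For example, `spread_loads([6.0, 6.0, 4.0, 4.0, 2.0, 2.0], 3)` pairs each 6 kW load with a 2 kW load and the two 4 kW loads together, giving 8 kW in every rack instead of a single hot rack.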

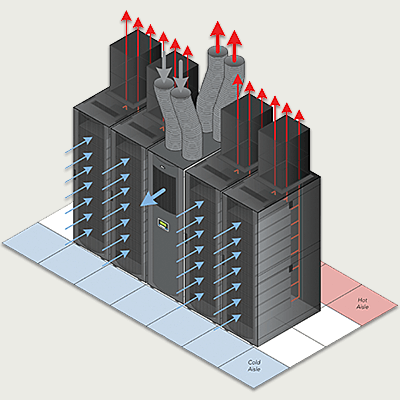

6. Arrange racks in a hot-aisle/cold-aisle configuration.

Separating cold air supply from the hot air produced by equipment is the key to cooling efficiency. Arrange racks in rows so the fronts face each other in cold aisles and the backs face each other in hot aisles. This prevents equipment from drawing in hot air from equipment in the adjacent row and can markedly reduce energy use.
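A quick way to audit an existing floor plan is to check each aisle between adjacent rows. The sketch below uses a hypothetical model: rows are assumed to run east-west, each row is described by the direction its fronts face ("N" or "S"), and rows are listed from south to north. An aisle is cold when both fronts face into it, hot when both backs face into it, and "mixed" when one row's exhaust blows straight into the next row's intake, which is exactly the condition the hot-aisle/cold-aisle layout is meant to eliminate.

```python
def classify_aisles(row_fronts):
    """row_fronts lists the direction each row's fronts face ("N" or "S"),
    ordered from the southernmost row to the northernmost. Returns one label
    per aisle between adjacent rows: "cold", "hot" or "mixed"."""
    labels = []
    for south, north in zip(row_fronts, row_fronts[1:]):
        if south == "N" and north == "S":
            labels.append("cold")   # fronts face each other
        elif south == "S" and north == "N":
            labels.append("hot")    # backs face each other
        else:
            labels.append("mixed")  # one row's exhaust blows into the other's intake
    return labels
```

A correctly alternating layout such as `["N", "S", "N", "S"]` yields `["cold", "hot", "cold"]`, while rows all facing the same way produce only "mixed" aisles.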

You should also “bay” your rack enclosures by connecting them side-to-side. Baying creates a physical barrier between hot and cold air that discourages recirculation.

To take this a step further, you can achieve basic aisle containment with data center curtains made of plastic strips—like those you would find in a walk-in freezer. Make sure to choose curtains designed to comply with national and local fire codes.

7. Manage passive airflow with blanking panels.

Airflow management provides substantial benefits at little or no expense. Installing simple accessories such as blanking panels, which are often overlooked during initial rack purchases, can significantly improve cooling efficiency. The panels prevent cold air bypass and hot air recirculation through open spaces. Snap-in 1U blanking panels are usually the most convenient type: they take much less time to install than screw-in models, and because each panel is one rack unit high, they can fill an empty space of any height. Air tends to follow the path of least resistance, so even a modest barrier can make a big difference.
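With 1U panels, the number of panels a rack needs is simply the number of empty rack units. The sketch below computes those positions from a list of installed devices; the 42U rack height and the `(bottom_u, height_u)` input format are assumptions for illustration.

```python
def empty_rack_units(rack_height_u, occupied):
    """Return the 1-based U positions not covered by any installed device.

    occupied is a list of (bottom_u, height_u) tuples, e.g. (10, 2) for a
    2U server occupying U10 and U11."""
    used = set()
    for bottom, height in occupied:
        used.update(range(bottom, bottom + height))
    return [u for u in range(1, rack_height_u + 1) if u not in used]
```

For a hypothetical 42U rack holding a 2U device at U1, a 4U device at U5 and a 3U device at U40, `len(empty_rack_units(42, [(1, 2), (5, 4), (40, 3)]))` gives 33, so 33 snap-in 1U panels would close every gap.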

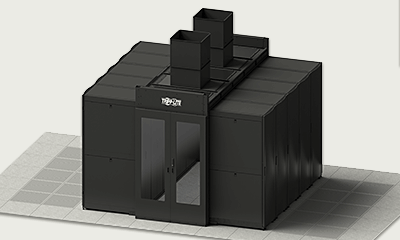

8. Use enclosures instead of open frame racks.

Open frame racks are perfect for some applications, but they offer very little control over airflow. Make sure front-to-rear airflow is controlled by using enclosures with fully ventilated front and rear doors. If rack-level security is not a concern, you can remove the front and rear doors or purchase enclosures without them. Some enclosures may use solid doors to seal the airflow path but will still maintain front-to-rear airflow through ducting. If you must have a glass door, make sure the enclosure permits enough airflow to keep your equipment cool. If you have devices that use side-to-side airflow (common with network switches and routers due to their cabling requirements), install internal rack gaskets or side ducts to improve airflow.

9. Install solid side panels.

Ventilated side panels may seem like they would improve cooling, but in reality they allow hot air to recirculate and cause cooling problems. Solid side panels prevent hot air from recirculating around the sides of the enclosure. Bayed enclosures should have solid side panels between them to prevent hot air from traveling from rack to rack inside the row.

10. Manage cables efficiently.

Unmanaged cabling blocks airflow, preventing efficient cold air distribution under raised floors and causing heat to build up inside enclosures. In raised-floor environments, move under-floor cabling to overhead cable managers such as ladders or troughs. Inside enclosures, use horizontal and vertical cable managers to organize patch cables and power cords.

A sound cable management strategy also reduces troubleshooting time, repair time and installation errors. Remember that a standard-width, standard-depth enclosure might not have enough room for all the cables required in a high-density installation. In such a case, consider an extra-wide or extra-deep enclosure to provide more breathing room.

11. Use passive heat removal with thermal ducts.

Passive heat removal solutions help remove heat from your racks and data center without adding energy costs. A simple, straightforward version of passive heat removal can cool a closed room: add two vents that open onto a climate-controlled area, one high on a wall and the other near the floor on a wall or door. Hot air rises and flows out through the upper vent, and cooler air enters through the lower vent to replace it. Just remember that these vents need a source of sufficiently cool, clean air to draw from.
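How much replacement air is "sufficient" can be estimated with a common HVAC rule of thumb that is not part of the original text: airflow in CFM ≈ 3.16 × heat load in watts ÷ temperature rise in °F. It follows from the sensible-heat equation BTU/hr = 1.08 × CFM × ΔT (1.08 assumes air at standard density) and 1 W = 3.412 BTU/hr. The sketch below treats the room's heat load and the allowed temperature rise between incoming and exhaust air as inputs you supply; it is a rough sizing aid, not a substitute for an engineering assessment.

```python
BTU_PER_HR_PER_WATT = 3.412
# Sensible-heat factor for air at standard density:
# 0.075 lb/ft^3 * 0.24 BTU/(lb*F) * 60 min/h = 1.08
SENSIBLE_HEAT_FACTOR = 1.08


def required_airflow_cfm(heat_load_watts, delta_t_f):
    """Airflow (cubic feet per minute) needed to carry away heat_load_watts
    if the air warms by delta_t_f degrees Fahrenheit on its way through."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    btu_per_hr = heat_load_watts * BTU_PER_HR_PER_WATT
    return btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)
```

For example, removing 2,000 W with a 20 °F rise needs roughly 316 CFM. Halving the allowed rise doubles the required airflow, which is why the replacement air must be meaningfully cooler than the room.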

For even better heat removal, connect thermal ducts to your rack enclosures. They will act like adjustable chimneys, routing equipment exhaust directly to the HVAC/CRAC return duct or plenum. Hot air is physically isolated, so there is no way for it to recirculate and pollute the cold air supply. Convection and the negative pressure of the return air duct draw heat from the enclosure, while the HVAC/CRAC system pumping air into the room creates positive pressure. The result is a highly efficient airflow path drawing cool air in and pushing hot air out.

Enclosure-based thermal ducting is also compatible with hot-aisle/cold-aisle configurations and fire codes, so retrofitting an existing installation is relatively painless. It provides a more affordable and practical alternative to aisle containment enclosures, which essentially create a room within a room. Most fire codes require installing or extending expensive fire suppression systems inside these aisle containment enclosures, so costs, delays and disruption can add up quickly.

12. Use active heat removal.

Active heat removal solutions assist passive heat removal with ventilation fans. Returning to the high/low room vents described above, you can assist airflow by adding a fan to the upper vent. Hot air is less dense than cool air, so it rises toward the upper vent, and the fan accelerates the process.

Active heat removal can also assist in situations where thermal ducts cannot be connected directly to the return duct. Fans attached inside an enclosure pull heat up and out, so you don’t have to rely on the negative pressure of the return duct. Fans can be attached to the roof of an enclosure or in any rack space, with or without thermal ducts. And although these devices use electricity and generate some heat, the draw and heat output are minimal when compared to compressor-driven air conditioners. Using variable-speed fans governed by the ambient temperature may also reduce power consumption.
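Variable-speed fans are often governed by a simple fan curve that maps measured temperature to fan speed. The sketch below shows a linear curve; the start temperature, full-speed temperature and minimum duty cycle are hypothetical defaults, and real fan controllers may use different curve shapes or hysteresis.

```python
def fan_duty_percent(temp_c, start_c=25.0, full_c=35.0, min_duty=20.0):
    """Linear fan curve: run at min_duty at or below start_c, 100% at or
    above full_c, and scale linearly in between. Defaults are hypothetical."""
    if temp_c <= start_c:
        return min_duty
    if temp_c >= full_c:
        return 100.0
    fraction = (temp_c - start_c) / (full_c - start_c)
    return min_duty + fraction * (100.0 - min_duty)
```

With these defaults, 30 °C yields a 60% duty cycle. The power savings come largely from the fan affinity laws: ideally, fan power scales with roughly the cube of speed, so a fan running at half speed draws only about one-eighth of its full-speed power.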

13. Use close-coupled cooling.

If heat-related problems remain after the implementation of other cooling best practices, a close-coupled cooling system can be a smart and economical choice. It’s an ideal solution when masonry exterior walls interfere with effective heat dissipation, when replacement air is unavailable because the room is surrounded by non-climate controlled areas, when inconsistent building HVAC operations cause potentially damaging temperature fluctuations, or when wattages per rack are simply too high for other methods. Just keep in mind that, in many cases, close-coupled cooling is designed to supplement—not replace—other best practices. Optimizing data center cooling efficiency means combining all viable and compatible options.

Close-coupled cooling systems deliver the precise temperature control IT equipment needs, and they are typically more efficient than traditional perimeter and raised-floor systems. They can also improve the efficiency of existing CRAC systems by eliminating hot spots without requiring a lower CRAC temperature setting.

Although close-coupled cooling systems require a larger investment than other cooling best practices, they are still much less expensive than installing or expanding larger HVAC/CRAC systems.

A few different types exist:

- Portable cooling systems roll into place at any time with minimal disruption. They can either cool a room or focus cold air on a particular rack or hot spot through a flexible output duct. These types of systems are available in a variety of capacities.

- Rack-based cooling systems mount inside a rack enclosure. They take up rack space but no additional floor space.

- Row-based cooling systems fit inside a rack row, like an additional enclosure, and maintain hot-aisle/cold-aisle separation.

14. Consider what happens during an outage.

If you have long-lasting backup power for your data center equipment, consider extending it to your cooling systems as well. During a blackout, servers and other equipment backed up by a UPS system continue to operate and produce heat, but cooling systems may be without power. Without a plan to provide sufficient cooling during an outage, your equipment may shut down from thermal overload long before battery power runs out. This is especially likely with systems such as VoIP that require hours of battery backup runtime.
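A useful starting point for sizing backup power for cooling is the heat the UPS-backed load keeps producing, since nearly all the electrical power IT equipment consumes ends up as heat. The sketch below converts that load into BTU/hr (1 W = 3.412 BTU/hr) and tons of cooling (1 ton = 12,000 BTU/hr). The input is an assumption you would take from your UPS load readings. UPS losses add some heat on top of this, so treat the result as a floor, not a final cooling requirement.

```python
BTU_PER_HR_PER_WATT = 3.412
BTU_PER_HR_PER_TON = 12_000  # one ton of refrigeration


def outage_cooling_load(ups_load_watts):
    """Heat the UPS-backed equipment keeps producing during an outage,
    returned as (BTU/hr, tons of cooling)."""
    btu_per_hr = ups_load_watts * BTU_PER_HR_PER_WATT
    return btu_per_hr, btu_per_hr / BTU_PER_HR_PER_TON
```

For example, a 6,000 W UPS-backed load produces about 20,472 BTU/hr, or roughly 1.7 tons of cooling, for as long as the batteries keep it running.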

15. Schedule a data center assessment.

As equipment density increases in small to mid-size data centers, lack of planning often leads to heat problems. Although IT equipment does not require meat-locker temperatures to run properly, excessive heat or frequent temperature fluctuations can reduce equipment reliability and availability.

Every installation is different, and the right solution depends on the site, equipment and application. A data center assessment can help you identify and resolve heat-related problems and determine the ideal cooling provisioning for your space.